The Robotics Roundup is a weekly news post covering some of the most exciting recent developments in robotics.

In today’s edition we have:

- ‘Experiential robotics’ on display at Northeastern

- New method uses crowdsourced feedback to help train robots

- Tiny living robots made from human cells surprise scientists

- A color-based sensor to emulate skin’s sensitivity

- IEEE Video Friday: Punch-Out

‘Experiential robotics’ on display at Northeastern

The Institute for Experiential Robotics at Northeastern University is conducting transformative research in its new EXP building, developing innovative robots with practical applications. Notably, researchers are working on robots that can handle chopsticks for precise manipulation and a snake-like robot designed to operate in sandy environments. One project, funded by a National Science Foundation grant, involves the development of robotics applications for the seafood packaging industry, such as the Voxel Enabled Robotic Assistant (VERA) system. VERA features an assistive robotic table with embedded robotic cubes, or voxels, that help in moving items. Another notable project is the Crater Observing Bio-inspired Rolling Articulator (COBRA), designed for NASA’s BIG Idea Challenge to navigate difficult terrains. Undergraduate and postgraduate students are actively involved in these projects, taking advantage of the state-of-the-art lab spaces. The new institute currently hosts 20 faculty members working across various disciplines. The aim of the institute is to enrich human experiences through the meaningful development and deployment of robotics technology.

New method uses crowdsourced feedback to help train robots

Researchers from MIT, Harvard University, and the University of Washington have developed a new reinforcement learning approach for AI that leverages crowdsourced feedback from non-experts to guide the AI agent in its learning. Unlike traditional approaches that rely on a carefully designed reward function crafted by human experts, this new method, called HuGE (Human Guided Exploration), allows the AI agent to learn more quickly despite the potential for errors in the feedback data. This approach separates the learning process into two parts: the goal selector algorithm, which is updated with human feedback and guides the agent’s exploration, and the agent’s self-supervised exploration. Preliminary tests of the method, both simulated and real-world, show that HuGE helps agents learn to achieve their set goals faster than other methods.
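To make the two-part split more concrete, here is a minimal toy sketch in Python. It is not the authors' implementation: the one-dimensional world, the function names `noisy_preference` and `explore`, the score-counting goal selector, and the random-walk exploration are all assumptions made for illustration. A simulated non-expert compares two visited states and is sometimes wrong, a simple score table plays the role of the goal selector, and the agent then explores on its own from the state the selector prefers.

```python
import random


def noisy_preference(a, b, goal, error_rate, rng):
    """Simulated non-expert label: which of two states looks closer to the goal.

    With probability `error_rate` the annotator picks the wrong one.
    """
    closer, farther = (a, b) if abs(goal - a) <= abs(goal - b) else (b, a)
    return farther if rng.random() < error_rate else closer


def explore(goal=20, error_rate=0.2, rounds=200, walk_len=3, seed=0):
    """Toy loop on an integer line (hypothetical setup, for illustration only)."""
    rng = random.Random(seed)
    visited = {0}        # states the agent has reached so far
    score = {0: 0.0}     # stand-in for the goal selector: one score per state

    for _ in range(rounds):
        # Part 1: update the goal selector from one noisy human comparison.
        if len(visited) >= 2:
            a, b = rng.sample(sorted(visited), 2)
            winner = noisy_preference(a, b, goal, error_rate, rng)
            loser = b if winner == a else a
            score[winner] += 1.0
            score[loser] -= 1.0

        # The selector picks the visited state it currently rates highest.
        frontier = max(sorted(visited), key=lambda s: score[s])

        # Part 2: exploration without feedback, here a short random walk
        # starting from the selected state.
        pos = frontier
        for _ in range(walk_len):
            pos += rng.choice((-1, 1))
            if pos not in visited:
                visited.add(pos)
                score[pos] = 0.0

        if goal in visited:
            break

    return visited, score


if __name__ == "__main__":
    visited, score = explore()
    print("farthest state reached:", max(visited))
```

Because the feedback only steers where exploration starts, a wrong label slows the toy agent down rather than teaching it a wrong behavior, which is the intuition behind tolerating errors in crowdsourced data.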

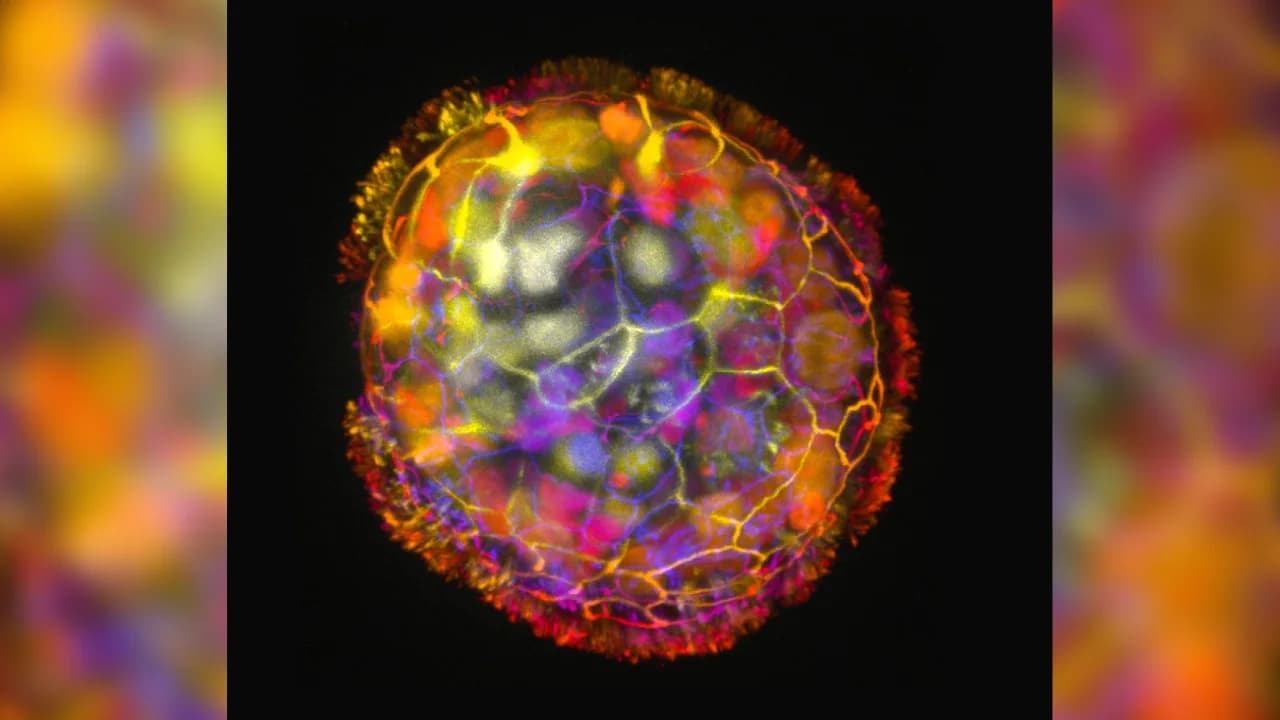

Tiny living robots made from human cells surprise scientists

Researchers from Tufts University and Harvard University’s Wyss Institute have developed tiny living robots, called anthrobots, using human tracheal cells. Unlike the previously created xenobots, which were developed from frog stem cells, the anthrobots were grown from a single cell and can self-assemble, a unique feature among biological robots. The anthrobots can move around thanks to hair-like projections called cilia on the tracheal cells, which act like oars. Initial experiments have shown promising results, with the anthrobots encouraging growth in damaged human neurons in a lab dish. Possible medical applications include healing wounds or damaged tissue, although the research is in its early stages. The researchers say the anthrobots raise no safety or ethical concerns, as they are neither derived from human embryos nor genetically modified, and they naturally biodegrade after a few weeks.

A color-based sensor to emulate skin’s sensitivity

Researchers from EPFL’s Reconfigurable Robotics Lab (RRL) have developed a sensor for soft robots and wearable technologies that uses color changes to detect multiple stimuli. The device, called ChromoSense, contains three sections dyed red, green, and blue within a translucent rubber cylinder. An LED sends light through the core of the device, and a miniaturized spectral meter at the bottom detects changes in the light’s path through the colors as the device bends or stretches. Additionally, a thermosensitive section with a special dye can detect temperature changes. Unlike other robotic sensing technologies, ChromoSense relies on a simple mechanical structure and color instead of cameras, making it potentially suitable for inexpensive mass production. The technology could be used in assistive technologies, athletic gear, and daily wearables. However, the team is still working on decoupling simultaneous stimuli. Future plans include experimenting with different formats for ChromoSense.

IEEE Video Friday: Punch-Out

IEEE Spectrum’s Video Friday is a collection of robotics videos showcasing advancements in the field. Highlights include IHMC and Boardwalk Robotics demonstrating the utility of bipedal humanoid robots, the successful 59th flight of NASA’s Ingenuity Helicopter on Mars, and the development of a parallel wire-driven leg structure that enables a robot to achieve both continuous jumping and high jumping. Other videos show a robot named Cassie Blue navigating a moving walkway, a novel sensor called ChromoSense that perceives multiple stimuli at once, and a small crawling robot called SLOT designed for pipe inspection and confined space exploration. In addition, the MIT CSAIL team introduced an algorithm for robot navigation in cluttered environments, and a humanoid named RH5 accomplished high-speed dynamic walking.