The Robotics Roundup is a weekly news post covering some of the most exciting developments in robotics from the past week.

In today’s edition we have:

- AI gives robot a push to identify and remember objects

- Do robots have to be human-like for us to trust them?

- Targeted stiffening yields more efficient soft robot arms

- Microrobots for the study of cells

- A technique to facilitate the robotic manipulation of crumpled cloths

AI gives robot a push to identify and remember objects

UT Dallas computer scientists have developed a new approach to help robots recognize and remember objects. Using a Fetch Robotics mobile manipulator named Ramp, the researchers trained the robot to recognize objects through repeated interactions. Previous approaches relied on a single push or grasp by the robot, but the new system lets the robot push objects multiple times to collect a sequence of images and segment the objects. The researchers presented their findings at the Robotics: Science and Systems conference. The technology aims to help robots detect a variety of objects and generalize to similar versions of common items. The researchers plan to improve other functions, such as planning and control, for future applications like sorting recycled materials.

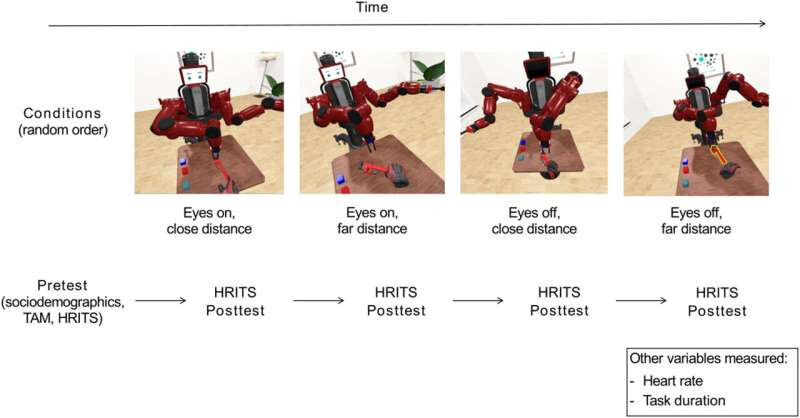

Do robots have to be human-like for us to trust them?

A recent study examined human trust when collaborating with eyed and non-eyed robots. The research found that humans may not require human-like machines with eyes to trust and work with them. In fact, participants in the study seemed to collaborate better with machine-like, eyeless robots. Objective measures, such as task completion time and pupil size, indicated that participants were more comfortable cooperating with non-eyed robots. This suggests that anthropomorphism may not be a beneficial feature for collaborative robots. The study was conducted by researchers from Ruhr University Bochum, the University of Coimbra, and the University of Trás-os-Montes and Alto Douro.

Targeted stiffening yields more efficient soft robot arms

Researchers at Harvard University and MIT are developing soft robot arms with improved capabilities for handling delicate objects. Passive compliance allows the robots to adjust their form and pressure to accommodate various tasks, making interactions with humans safer and more efficient. However, passive compliance also limits the robots' payload capacity. To overcome this, the researchers have introduced localized body stiffening using variable stiffness actuators. This allows the arms to stiffen where needed and handle greater payloads while still maintaining flexibility. The approach is expected to improve soft robot arms' performance in tasks such as object manipulation and navigating cluttered environments.

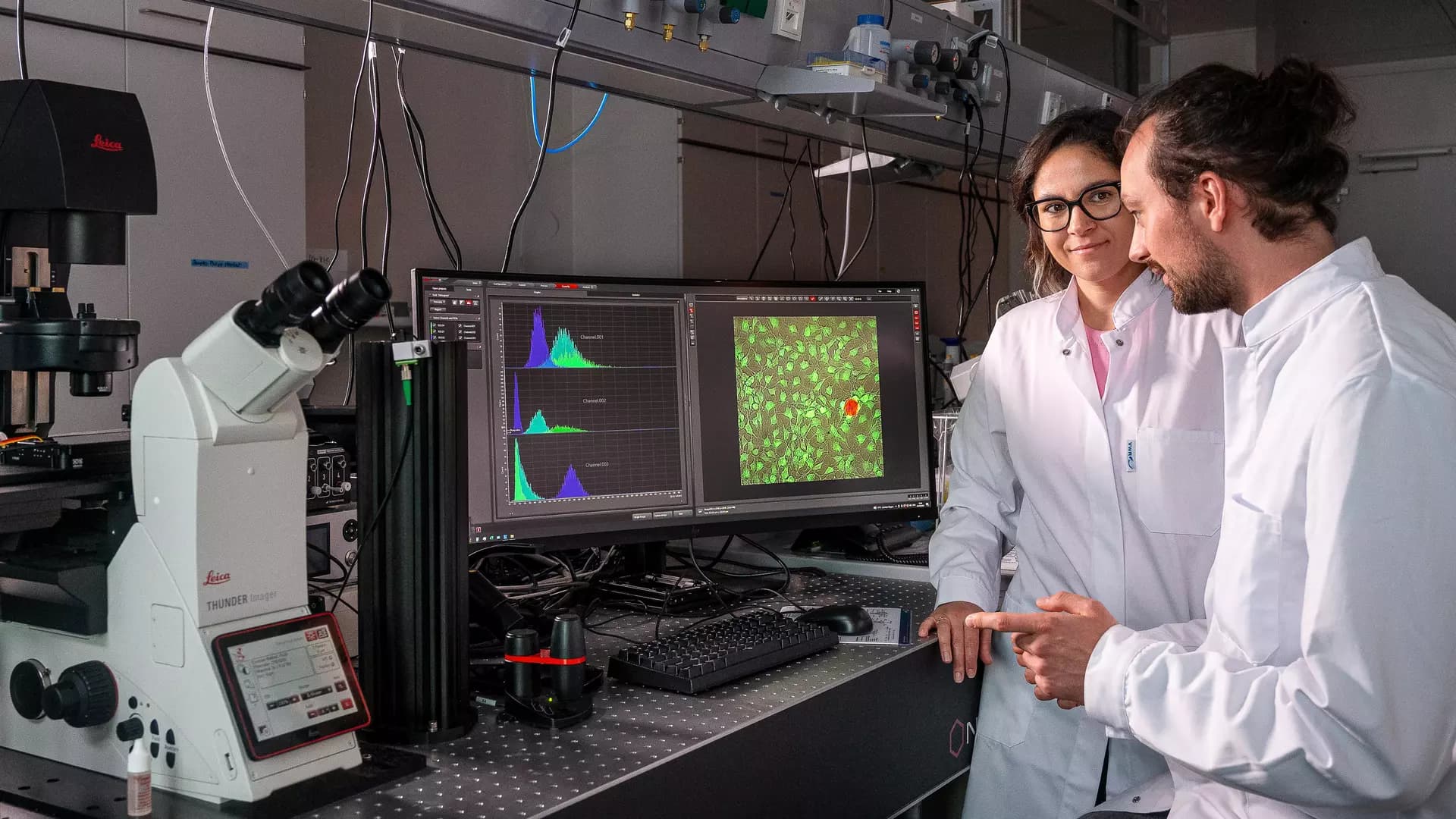

Microrobots for the study of cells

Researchers led by Prof. Berna Özkale Edelmann have developed microbots made of gold nanorods and fluorescent dye that are half as thick as a human hair. The microbots, called TACSI microbots, are part of a system that can navigate through groups of cells and stimulate individual cells through temperature changes. The researchers believe that small temperature changes can influence cell processes and are investigating whether thermal stimulation can be used to heal wounds and treat diseases like cancer.

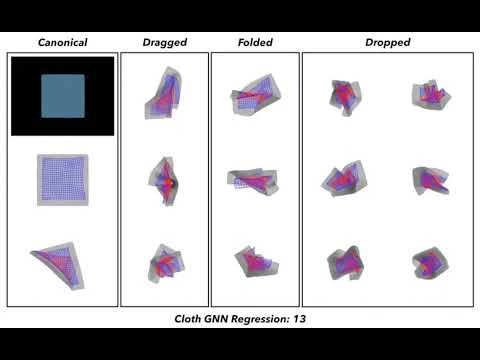

A technique to facilitate the robotic manipulation of crumpled cloths

Researchers at ETH Zurich have developed a computational technique to create visual representations of crumpled cloths, which could help robots effectively grasp and manipulate them. The technique adapts ideas from single-view human body reconstruction to reconstruct cloths from top-view depth observations. The researchers trained their model using a dataset of synthetic images and labeled real-world cloth images. The model accurately predicts the positions and visibility of cloth vertices, enabling efficient manipulation. In simulations and experiments using the ABB YuMi robot, the model successfully guided the robot’s movements in holding and manipulating various cloths. The researchers have made their datasets and code open-source, potentially advancing the capabilities of robots designed to assist with household chores.