Hi everyone! We are BANSHEE UAV, a multi-year research project team at California State Polytechnic University, Pomona (Cal Poly Pomona) College of Engineering. We have again teamed up with ROBOTIS this summer to utilize their Dynamixel motor technology. Our team gratefully thanks ROBOTIS for their continued sponsorship, and hopes the community will appreciate and give feedback on the accomplishments this summer!

I am Harris, and this is my second year with BANSHEE UAV. I will keep the community updated on our progress with (hopefully!) bi-weekly posts in this thread. Linked below are some of the previous summers' projects and the project webpage!

Summer 2021: Cal Poly Pomona Label Robot!

Summer 2022: Cal Poly Pomona's Battery Vending Machine

Project Webpage: https://www.bansheeuav.tech/

Laboratories and workspaces commonly become messy over the course of a semester. We hope to use both hardware and software solutions to sort and clean up workspaces within a defined environment. As the summer continues, I’ll be sure to update the project goals and implementations to better reflect the state of the design process.

6/12/23 Update

We attended the first ROBOTIS collaboration meeting and discussed some of the implementations. In addition, we organized our team into two separate sections.

Testing Team

The Testing Team will be responsible for the hardware aspect of the project. All majors are welcome to join the team, but members will need in-person access to the lab.

Software Team

The Software Team will consist of the members who are unable to attend in person. For now, they are responsible for researching fiducial marker detection options and how to interface with the Dynamixel motors.

Thank you, everyone, for your patience as we start our research project again. Any feedback is appreciated!

Hello! I’m here for the second update as the team finishes some of the research and continues to narrow down the scope of the project! To support the open-source community, we are doing our part and continue to update our repo on GitHub.

6/26/23 Update:

Hardware Team:

We spent the first few days getting up to speed on ArUco markers. Fig 1 below shows our progress in detecting multiple ArUco markers at the same time. In addition, the hardware team has gotten their feet wet by looking through and starting up the robotic arm, catching up on some of the progress made during Summer 2022. We hope to build on our previous work this session and use the ROBOTIS OpenManipulator-X.

Goals:

Get the software components (AR marker detection and distance estimation) to communicate with each other, and see how we can use kinematics to pick up the square blocks.

Use the code provided by the software team to pick up square blocks with an AR marker placed on top.
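For readers wondering how marker detection can feed a distance estimate, here is a minimal sketch using the pinhole camera model. It is not the team's code. It assumes the detector returns four pixel corners per marker in order around the square, and the marker size (3 cm) and focal length (600 px) in the usage note are made-up values that would need to come from the printed marker and a camera calibration.

```python
import math


def marker_side_px(corners):
    """Mean side length in pixels, given 4 (x, y) corners in order around the marker."""
    total = 0.0
    for i in range(4):
        x0, y0 = corners[i]
        x1, y1 = corners[(i + 1) % 4]
        total += math.hypot(x1 - x0, y1 - y0)
    return total / 4.0


def marker_center_px(corners):
    """Pixel centroid of the four corners."""
    cx = sum(c[0] for c in corners) / 4.0
    cy = sum(c[1] for c in corners) / 4.0
    return cx, cy


def estimate_distance_m(corners, marker_size_m, focal_length_px):
    """Pinhole model: distance = focal_length * real_size / apparent_size.

    Only a rough estimate: it assumes the marker faces the camera squarely.
    """
    side = marker_side_px(corners)
    if side <= 0:
        raise ValueError("degenerate marker corners")
    return focal_length_px * marker_size_m / side
```

For example, a marker that appears 100 px wide, with the assumed 3 cm size and 600 px focal length, would be estimated at about 0.18 m from the camera via `estimate_distance_m(corners, 0.03, 600.0)`.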

Software Team:

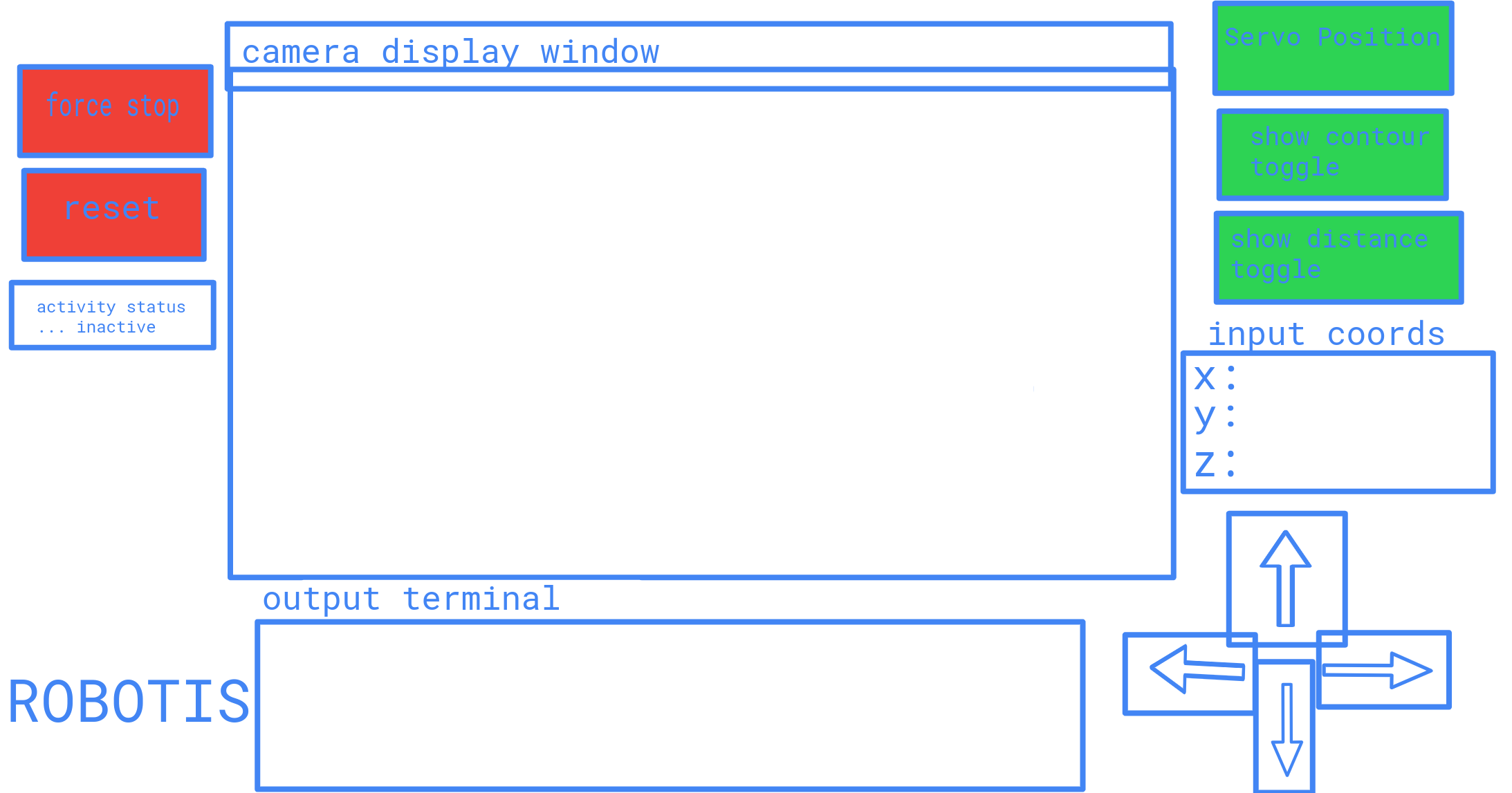

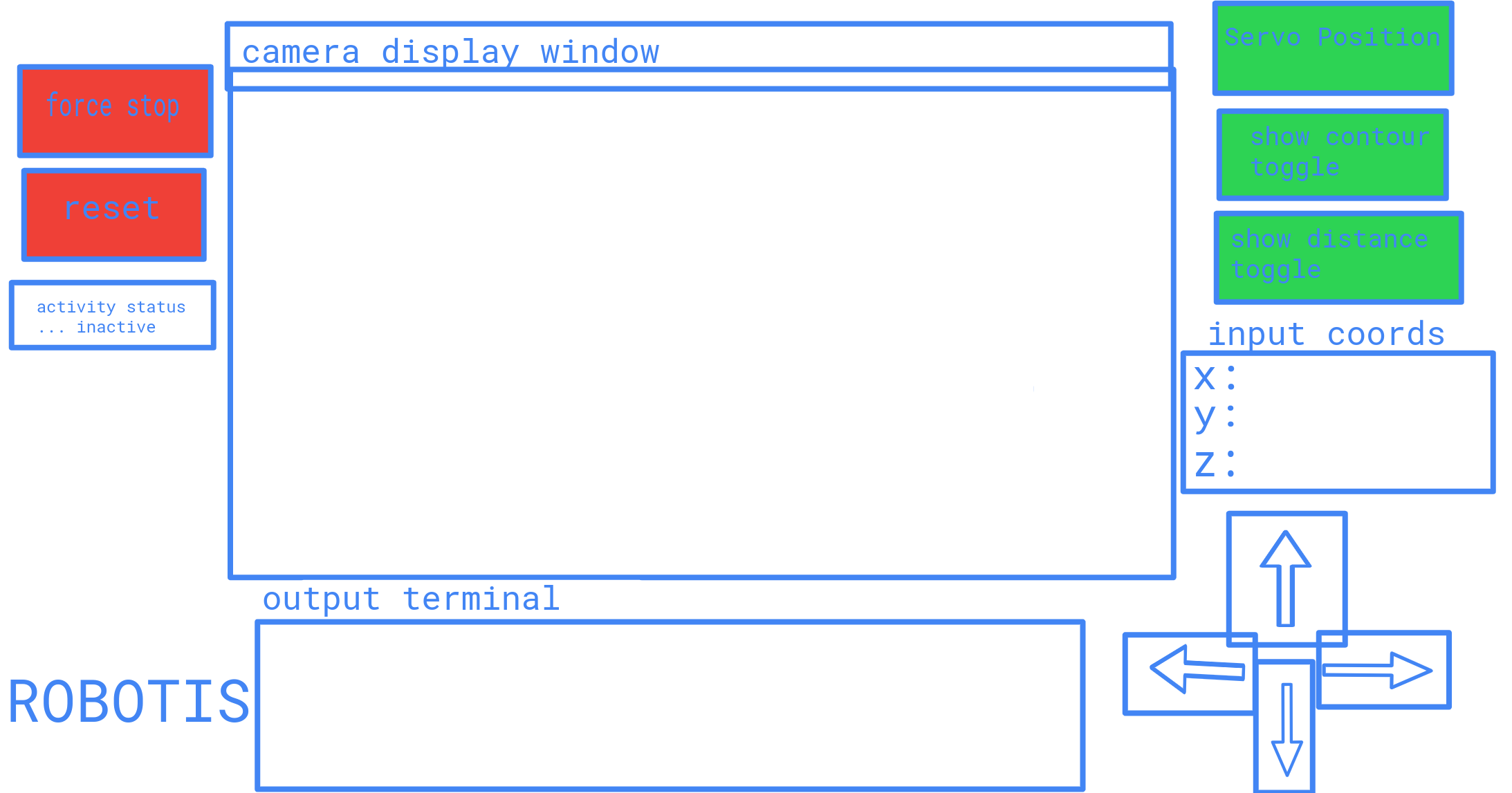

As the Software Team gets comfortable with the previous team’s work, the team is considering implementing the YOLO API, which offers modern object detection and is easy to pick up through its documentation. As the team moves forward with decisions, many members of the Software Team are testing the program and contributing to the linked GitHub. In addition, the Software Team is assisting the Hardware Team with the implementation of the ArUco markers, which is seen in Fig 1. Fig 2 shows a rough sketch of the GUI we are planning to create, with notable features including the camera stream and the movement controls.

Goals:

Combining YOLO and OpenManipulator-X. AR Marker Detection → Object Detection

Create a GUI for the camera interface and controls

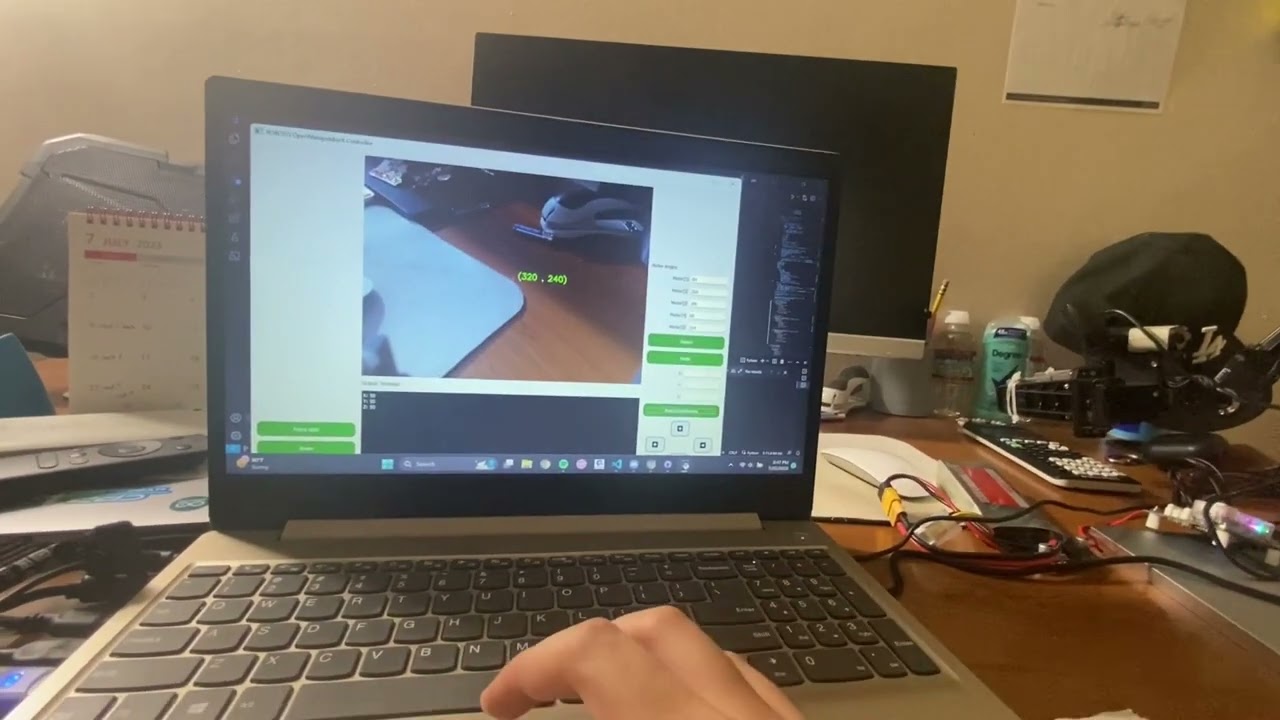

Fig 1: 7/1: Testing the robot arm at home after the meetup (6/31) at CPP

Fig 2: Draft for the Python GUI created by the Software Team

Any feedback is appreciated for the project. Thank you for the read, and I’ll see you guys again in two weeks!

Good morning! I’m here for the third update of the summer! The team is hard at work both in person and remotely toward our goal of autonomous movement with the ROBOTIS OpenManipulator-X. Without further ado, let’s jump in!

7/10 Update:

Hardware Team:

After the initial run of the arm, the team was excited to perform the kinematic calculations to find the initial and end positions. From the math, we’re able to demonstrate both Y-axis and Z-axis movement, as shown in Fig 1, Fig 2, and Fig 3. Our hardware team is making great progress, and we’re excited to continue working in the lab for the following two weeks.
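To give a feel for the kind of kinematic calculation involved, here is a simplified sketch of inverse kinematics for a planar two-link arm moving in the Y-Z plane. This is an illustration only, not the team's math: the real OpenManipulator-X has more joints, and the link lengths used in the usage note are placeholder values rather than measurements.

```python
import math


def inverse_kinematics_2link(y, z, l1, l2):
    """Return (shoulder, elbow) joint angles in radians that place the tip at (y, z).

    Uses the law of cosines and returns the solution with a non-negative elbow angle.
    """
    cos_elbow = (y * y + z * z - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_elbow) > 1.0 + 1e-9:
        raise ValueError("target is out of reach for these link lengths")
    cos_elbow = max(-1.0, min(1.0, cos_elbow))  # guard against rounding error
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(z, y) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def forward_kinematics_2link(shoulder, elbow, l1, l2):
    """Return the tip position (y, z) for the given joint angles."""
    y = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    z = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return y, z
```

A quick sanity check is to solve for a target such as `(0.15, 0.10)` with placeholder link lengths `l1=0.13` and `l2=0.124`, then pass the resulting angles back through `forward_kinematics_2link` and confirm the tip lands on the same point.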

Goals:

Fix the jerky, step-by-step movement seen in the demonstration videos. A mix of hardware and software issues may be the cause, and the team is working toward continuous motion.

Integrate the technology with the GUI.
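On the continuous-motion goal: since the cause has not been pinned down yet, the following is only one possible software-side approach, not the team's fix. The idea is to break a move into many small waypoints with an ease-in/ease-out profile instead of jumping straight to the goal. The function names and the number of steps are hypothetical.

```python
def smoothstep(t):
    """Ease-in/ease-out blend: 0 at t=0, 1 at t=1, zero slope at both ends."""
    t = min(1.0, max(0.0, t))
    return t * t * (3.0 - 2.0 * t)


def interpolate_joints(start, goal, steps):
    """Return steps + 1 joint-angle waypoints from start to goal (inclusive)."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    if len(start) != len(goal):
        raise ValueError("start and goal must have the same number of joints")
    waypoints = []
    for i in range(steps + 1):
        s = smoothstep(i / steps)
        waypoints.append([a + (b - a) * s for a, b in zip(start, goal)])
    return waypoints
```

Each waypoint would then be sent to the motors at a fixed rate. More steps give smoother motion at the cost of more commands per move.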

Software Team:

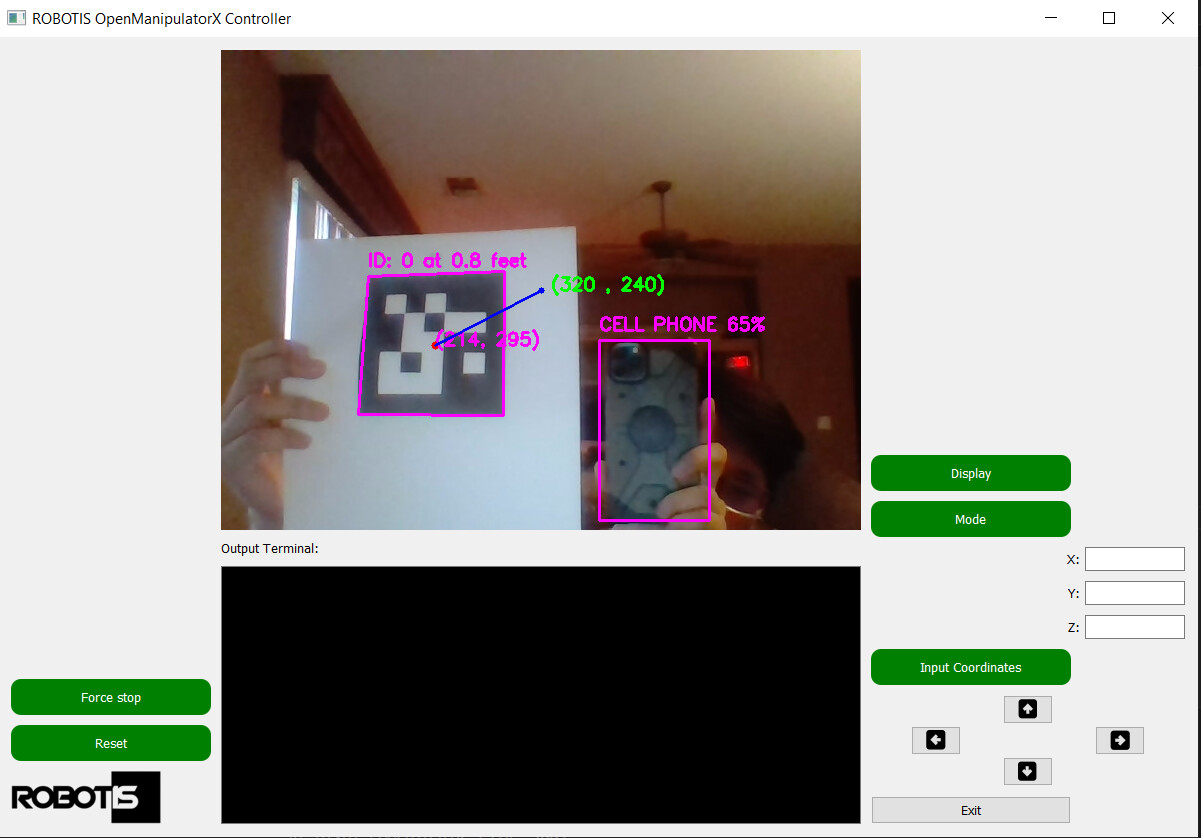

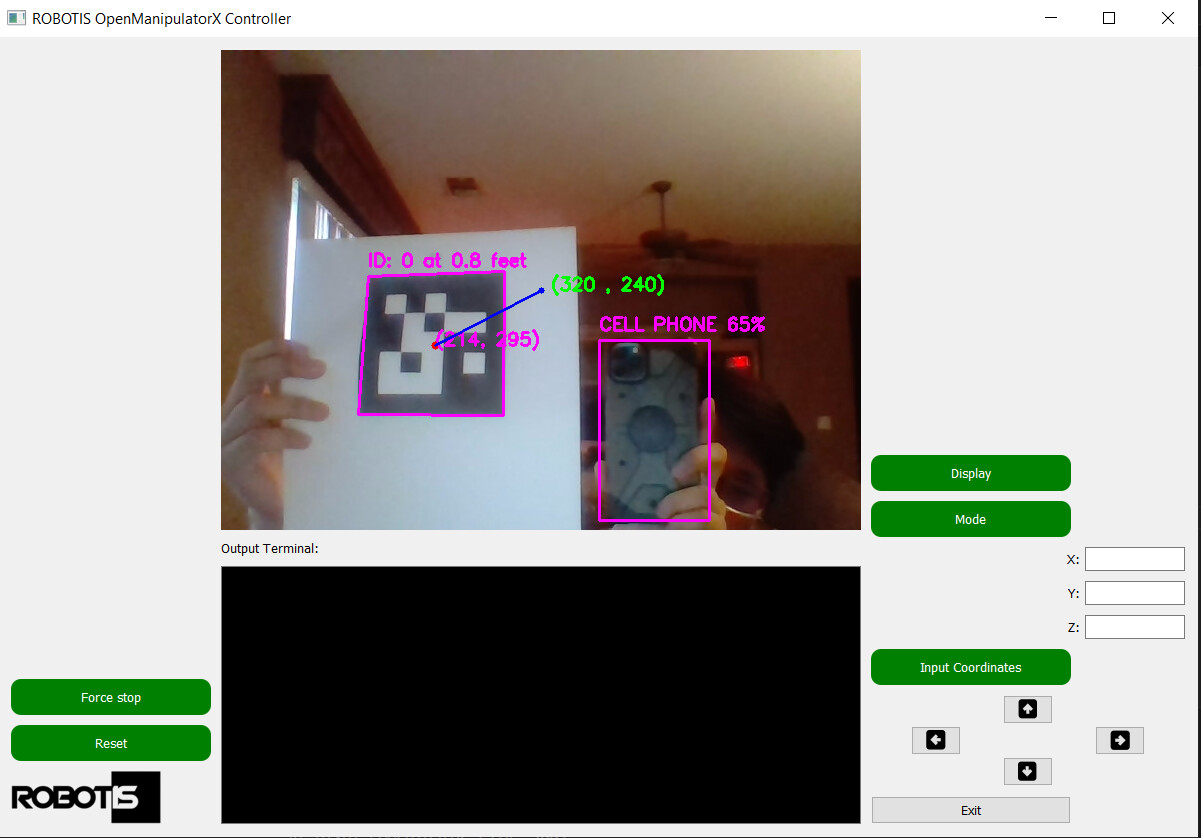

The team has been hard at work refining the design of the GUI with PyQt5. Fig 4 demonstrates the working camera interface, along with some of the proposed button locations, including an option to manually input coordinates for the robotic arm to move toward. The GUI also includes the camera feed, which in this update is running off a computer webcam. It runs both the ArUco marker tracker and the YOLO API to detect objects within the field of view.

Goals:

Map the software buttons to physical movement within the hardware.

Program the output terminal and combine it with the hardware team.
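As a rough idea of what mapping buttons to movement could look like, here is a small sketch that turns an arrow-button press into a new target coordinate and clamps it to a safe workspace. Everything here is hypothetical: the button names, the 1 cm step size, and the workspace limits are placeholders, and the GUI toolkit itself is left out so the logic can be tested on its own.

```python
# Hypothetical step size and workspace limits, in meters.
STEP_M = 0.01
WORKSPACE = {"x": (0.05, 0.25), "y": (-0.15, 0.15), "z": (0.02, 0.20)}

# Hypothetical button names mapped to (axis, direction).
BUTTON_DELTAS = {
    "forward": ("x", +1),
    "back": ("x", -1),
    "left": ("y", +1),
    "right": ("y", -1),
    "up": ("z", +1),
    "down": ("z", -1),
}


def apply_button(position, button, step=STEP_M):
    """Return a new target position after one button press, clamped to the workspace."""
    if button not in BUTTON_DELTAS:
        raise KeyError(f"unknown button: {button}")
    axis, sign = BUTTON_DELTAS[button]
    low, high = WORKSPACE[axis]
    new_position = dict(position)  # leave the caller's dict untouched
    new_position[axis] = min(high, max(low, position[axis] + sign * step))
    return new_position
```

In a GUI, each button's click handler would call `apply_button` with the current target and hand the result to the arm controller, so a press at the edge of the workspace simply has no effect.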

Thank you for the time, and I hope to see everyone again next week!

Fig 1: Y-Position Movement (7/10)

Fig 2: Z-Position Movement (7/10)

Fig 3: Combined Position for Y and Z Movement (7/10)

Fig 4: Combined GUI with the YOLO API (7/7)

Hello! I’m here for the fourth update this summer! The team has worked through some of the kinks from the previous weeks and is happy to share some of the results. The lines between the hardware and software components are blurring this week, as the team is now focusing on creating a unified product for demonstration.

7/24 Update:

Hardware Team:

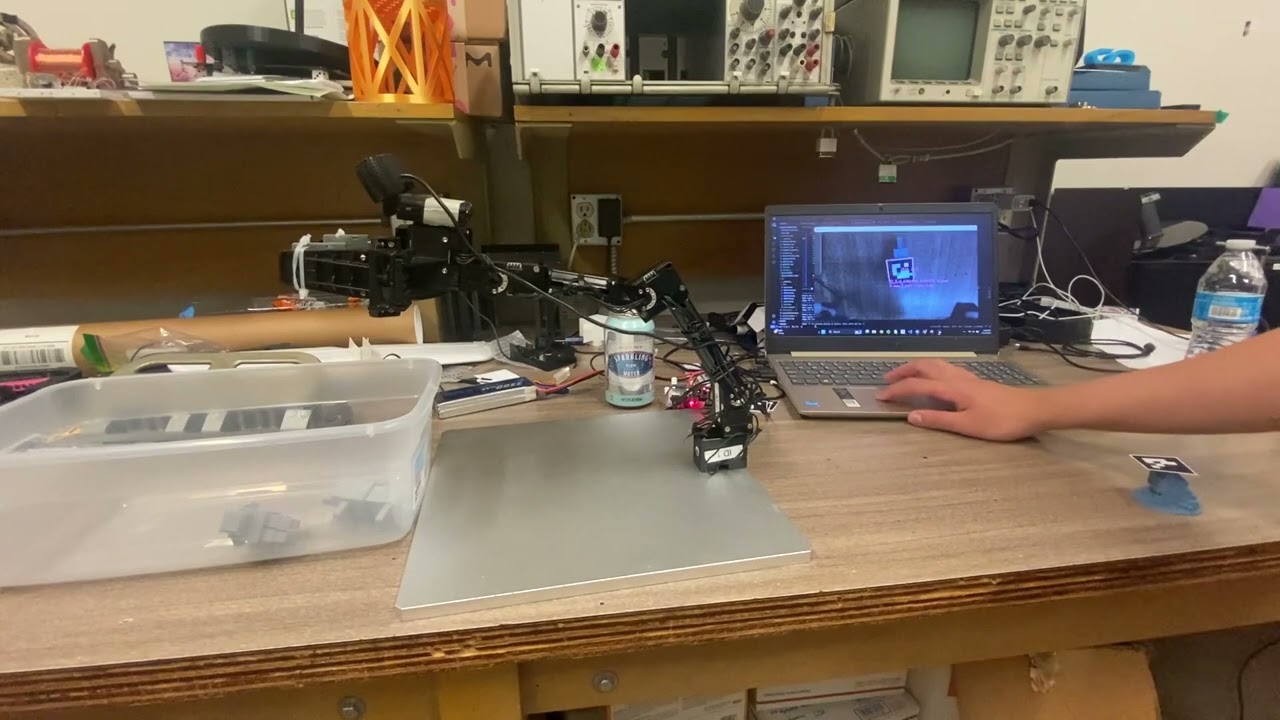

The ROBOTIS OpenManipulator-X motor configuration is working properly. Using kinematic calculations, we are able to move the arm to a given coordinate. To decrease wobble, we mounted the ROBOTIS arm on a metal sheet. To create objects for pick-up, we are 3D printing defined shapes with an attached ArUco marker.
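Once kinematics produces a joint angle, it has to be converted into the position value the motor understands. The sketch below shows that conversion under two assumptions that are not stated in this thread: a resolution of 4096 steps per revolution (as on Dynamixel X-series actuators in position control) and tick 2048 treated as the zero angle. The actual zero offset depends on how the arm is assembled and calibrated.

```python
import math

TICKS_PER_REV = 4096  # assumed X-series resolution
CENTER_TICK = 2048    # assumed tick value for a joint angle of 0 rad


def radians_to_ticks(angle_rad):
    """Convert a joint angle in radians to a position value, clamped to 0..4095."""
    ticks = CENTER_TICK + round(angle_rad * TICKS_PER_REV / (2.0 * math.pi))
    return max(0, min(TICKS_PER_REV - 1, ticks))


def ticks_to_radians(ticks):
    """Convert a position value back to a joint angle in radians."""
    return (ticks - CENTER_TICK) * 2.0 * math.pi / TICKS_PER_REV
```

Under these assumptions, a quarter turn (`math.pi / 2`) maps to tick 3072, and each tick corresponds to roughly 0.088 degrees.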

Software Team:

The software team was able to clean up much of the design of the graphical interface. The GUI is now able to control the ROBOTIS arm through a numeric entry pad or the arrows, as demonstrated in Fig 1. The software team and hardware team worked together on the controls for the robotic arm, then demonstrated the ability to pick up objects tagged with ArUco markers and drop them off into a specified bin (Fig 2)! Going forward, the software team is focused on working with machine learning packages to automatically find objects without the use of the ArUco markers and expand the range of operations.
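For anyone curious how a marker-based sort can be organized, here is a hedged sketch of planning one pick-and-place move from a detected marker ID. It is not the team's implementation: the marker-to-bin assignments, bin coordinates, hover height, and command format are all invented for illustration.

```python
# Hypothetical marker-to-bin assignments and bin locations (meters).
BIN_FOR_MARKER = {0: "bin_a", 1: "bin_b", 2: "bin_c"}
BIN_POSITIONS = {
    "bin_a": (0.10, 0.15, 0.05),
    "bin_b": (0.15, 0.15, 0.05),
    "bin_c": (0.20, 0.15, 0.05),
}
HOVER_HEIGHT_M = 0.10  # assumed safe travel height above the table


def plan_pick_and_place(marker_id, object_xyz):
    """Return an ordered list of (command, argument) steps for one object."""
    if marker_id not in BIN_FOR_MARKER:
        raise KeyError(f"no bin assigned to marker {marker_id}")
    ox, oy, oz = object_xyz
    bx, by, _ = BIN_POSITIONS[BIN_FOR_MARKER[marker_id]]
    return [
        ("move", (ox, oy, HOVER_HEIGHT_M)),  # hover above the object
        ("gripper", "open"),
        ("move", (ox, oy, oz)),              # descend onto the object
        ("gripper", "close"),
        ("move", (ox, oy, HOVER_HEIGHT_M)),  # lift straight up
        ("move", (bx, by, HOVER_HEIGHT_M)),  # travel to above the bin
        ("gripper", "open"),                 # drop the object
    ]
```

Keeping the plan as plain data makes it easy to log in the GUI's output terminal or to replay one step at a time while debugging.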

Fig 1: Entering coordinates through the GUI

Fig 2: Picking up Three Separate Objects through the Image Recognition

Thank you to ROBOTIS for their support and technical expertise over the last number of summers. Thank you to Diamond Bar High School for providing the use of their 3D printing equipment. The Cal Poly Pomona Sorting Machine Summer Project 2023 is a research task under the California State Polytechnic University, Pomona Battery As iNtegrated Structure High Endurance Experimental (BANSHEE) UAV.

Project Webpage: https://www.bansheeuav.tech/