SpeechCompass: Enhancing Mobile Captioning with Diarization and Directional Guidance via Multi-Microphone Localization

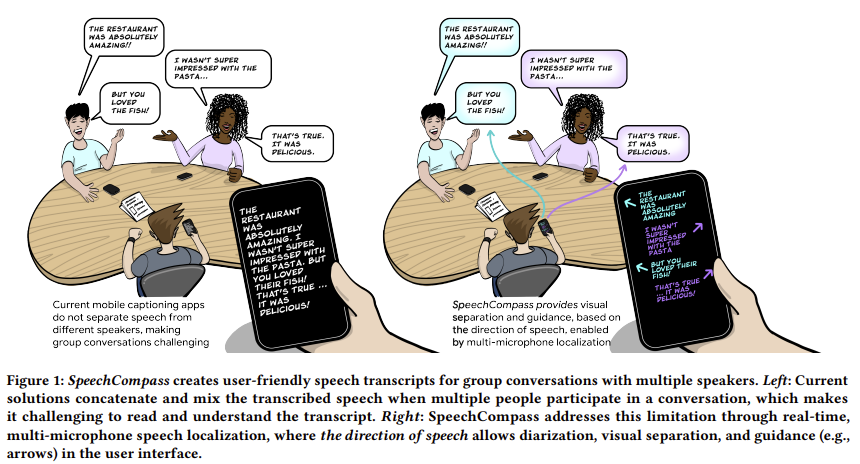

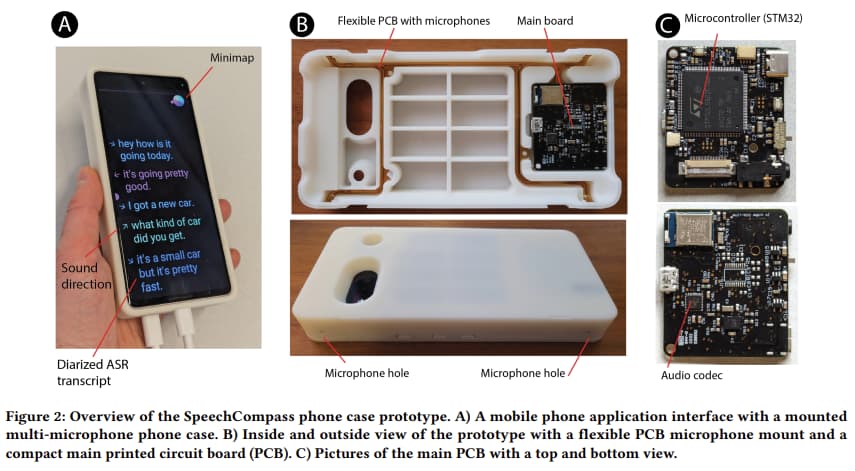

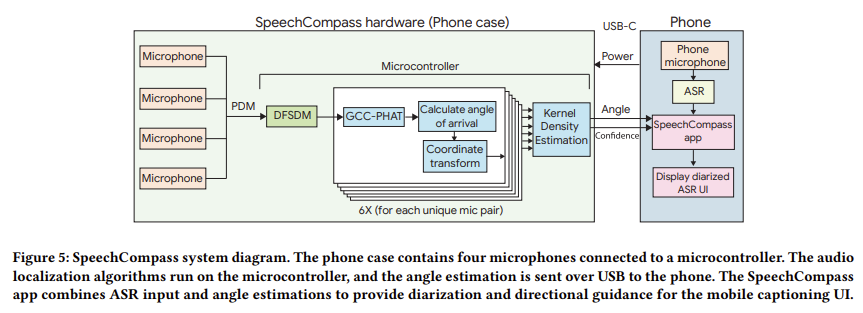

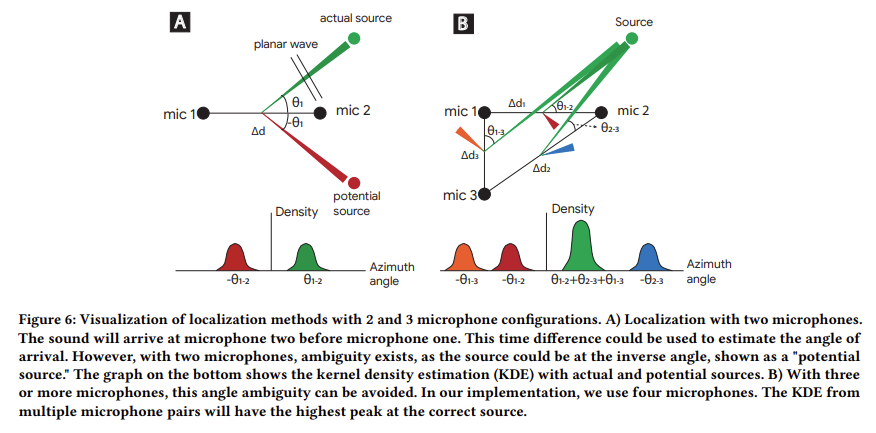

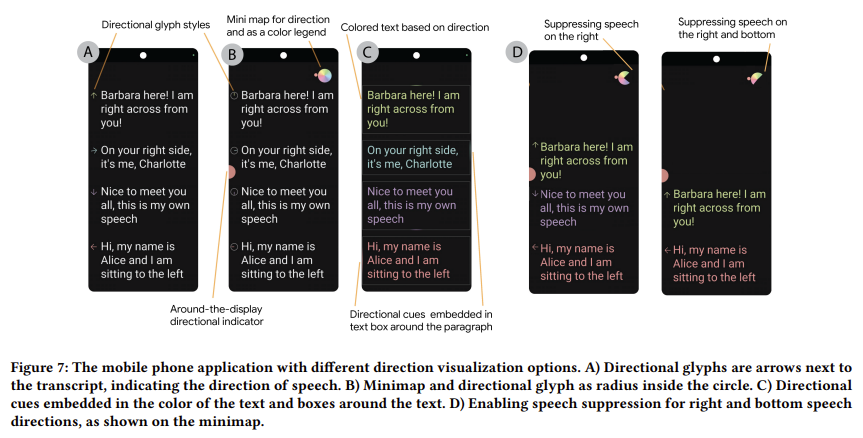

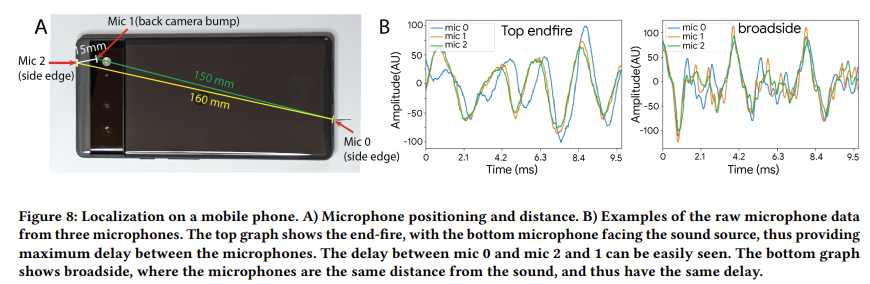

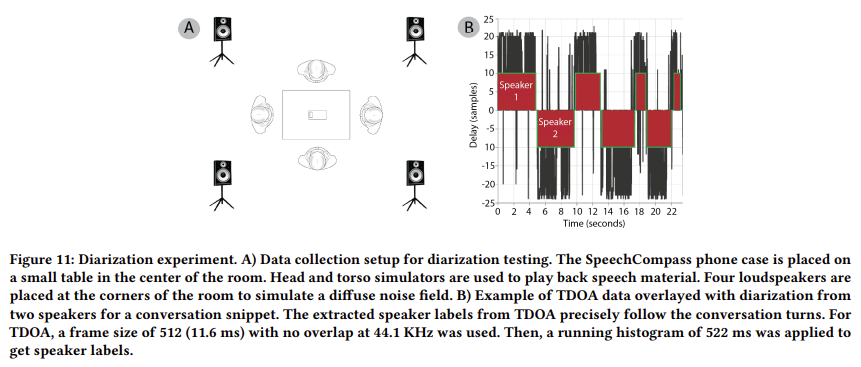

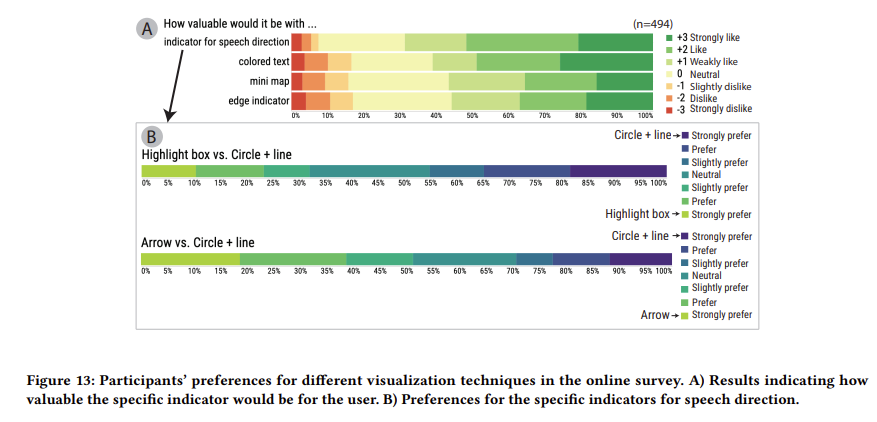

Abstract - Speech-to-text capabilities on mobile devices have proven helpful for hearing and speech accessibility, language translation, note-taking, and meeting transcripts. However, our foundational large-scale survey (n=263) shows that the inability to distinguish and indicate speaker direction makes these tools hard to use in group conversations. SpeechCompass addresses this limitation through real-time, multi-microphone speech localization, where the direction of speech enables visual separation and guidance (e.g., arrows) in the user interface. We introduce efficient real-time audio localization algorithms and custom sound perception hardware, running on a low-power microcontroller with four integrated microphones, which we characterize in technical evaluations. Informed by a large-scale survey (n=494), we conducted an in-person study of group conversations with eight frequent users of mobile speech-to-text, who provided feedback on five visualization styles. The value of diarization and visualizing localization was consistent across participants, with everyone agreeing on the value and potential of directional guidance for group conversations.
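As a rough illustration of how speech direction from a four-microphone array could drive directional guidance such as arrows, here is a minimal sketch. It estimates a direction of arrival from the time differences between two orthogonal microphone pairs, using plain time-domain cross-correlation and a far-field model. This is a textbook technique and is not necessarily the algorithm used in SpeechCompass. The microphone layout, 10 cm spacing, 48 kHz sample rate, the eight-arrow mapping, and the function names (`estimate_lag`, `azimuth_degrees`, `arrow_for`) are all hypothetical choices made for illustration.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s, approximate value in room-temperature air

# Hypothetical UI glyphs for eight 45-degree sectors, counterclockwise from +x
# (assumption: +x is the device's right edge, +y its top edge).
ARROWS = ["→", "↗", "↑", "↖", "←", "↙", "↓", "↘"]


def estimate_lag(ref, other, max_lag):
    """Return the integer lag in [-max_lag, max_lag] (samples) maximizing the
    plain cross-correlation sum_i ref[i] * other[i + lag].
    A positive lag means `other` hears the sound later than `ref`.
    Assumes both signals have the same length."""
    n = len(ref)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Only sum over indices where both ref[i] and other[i + lag] exist.
        score = sum(ref[i] * other[i + lag]
                    for i in range(max(0, -lag), min(n, n - lag)))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def azimuth_degrees(mic_a, mic_b, mic_c, mic_d, sample_rate, spacing):
    """Estimate source azimuth in [0, 360) degrees, counterclockwise from +x.

    Hypothetical layout: mic_a at (+spacing/2, 0), mic_b at (-spacing/2, 0),
    mic_c at (0, +spacing/2), mic_d at (0, -spacing/2). Under a far-field
    model the delay of b relative to a is spacing*cos(phi)/c and the delay
    of d relative to c is spacing*sin(phi)/c, so atan2 of the two delays
    recovers phi. Spacing and sample rate only bound the lag search.
    """
    max_lag = math.ceil(spacing / SPEED_OF_SOUND * sample_rate)
    lag_x = estimate_lag(mic_a, mic_b, max_lag)
    lag_y = estimate_lag(mic_c, mic_d, max_lag)
    return math.degrees(math.atan2(lag_y, lag_x)) % 360.0


def arrow_for(azimuth):
    """Map an azimuth (degrees) to the arrow glyph of its 45-degree sector."""
    return ARROWS[int(((azimuth + 22.5) % 360.0) // 45.0)]


if __name__ == "__main__":
    rng = random.Random(0)
    src = [rng.gauss(0.0, 1.0) for _ in range(2048)]  # white-noise "speech"

    def delayed(signal, k):
        # Shift by k >= 0 samples, zero-padding the start.
        return [0.0] * k + signal[:len(signal) - k]

    # A 5-sample lag on both pairs simulates a source at 45 degrees.
    az = azimuth_degrees(src, delayed(src, 5), src, delayed(src, 5), 48000, 0.1)
    print(round(az, 1), arrow_for(az))  # expected: 45.0 ↗
```

Integer-sample lags give only a coarse angle, and real rooms add reverberation and overlapping talkers, so practical systems typically add voice activity detection, temporal smoothing, and more robust correlation weighting such as GCC-PHAT. A microcontroller implementation would also replace these Python loops with optimized fixed-point or FFT-based code.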

CCS Concepts

• Human-centered computing → Accessibility technologies; Human computer interaction (HCI); Mobile devices.

Keywords

Assistive technology, hearing accessibility, localization, diarization, microphone array, captioning

Full Research Paper: https://arxiv.org/pdf/2502.08848

Full credit goes to: Artem Dementyev, Dimitri Kanevsky, Samuel J. Yang, Mathieu Parvaix, Chiong Lai, Alex Olwal, and the Google Research team
