Powered by DYNAMIXEL X-Series

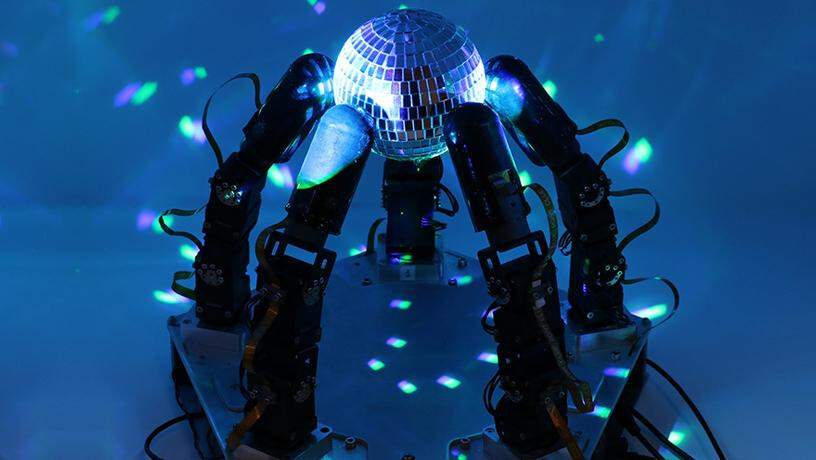

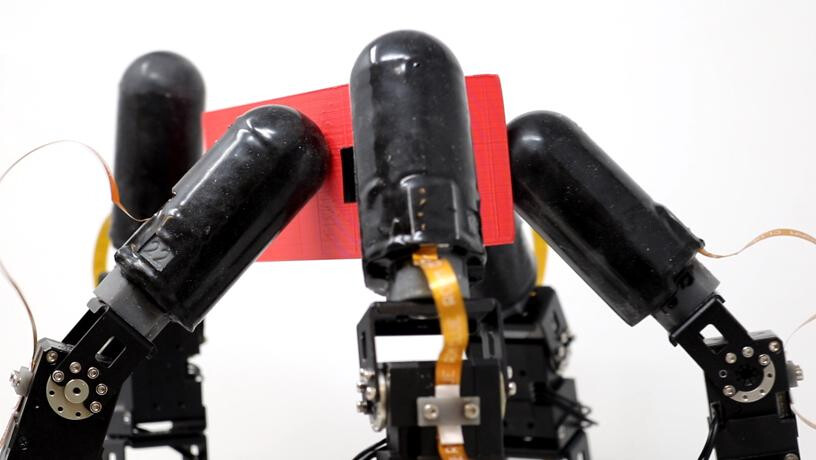

Columbia Engineers design a robot hand that is the first device of its kind to combine an advanced sense of touch with motor-learning algorithms. It does not rely on vision to manipulate objects.

Dexterous Manipulation with Tactile Fingers

Abstract: In this paper, we present a novel method for achieving dexterous manipulation of complex objects, while simultaneously securing the object without the use of passive support surfaces. We posit that a key difficulty for training such policies in a Reinforcement Learning framework is the difficulty of exploring the problem state space, as the accessible regions of this space form a complex structure along manifolds of a high-dimensional space. To address this challenge, we use two versions of the non-holonomic Rapidly-Exploring Random Trees algorithm; one version is more general, but requires explicit use of the environment’s transition function, while the second version uses manipulation-specific kinematic constraints to attain better sample efficiency. In both cases, we use states found via sampling-based exploration to generate reset distributions that enable training control policies under full dynamic constraints via model-free Reinforcement Learning. We show that these policies are effective at manipulation problems of higher difficulty than previously shown, and also transfer effectively to real robots. A number of example videos can also be found on the project website: sbrl.cs.columbia.edu
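To make the idea in the abstract more concrete, here is a minimal Python sketch of the general pattern it describes: grow a tree of reachable states by calling a transition function, then draw episode reset states from that tree. This is not the authors' implementation. The 2D ring-shaped state space, the function names (`transition`, `explore`, `sample_reset`) and every parameter value are assumptions chosen only for illustration; the thin ring stands in for the narrow, manifold-like region of valid states.

```python
import math
import random


def transition(state, action, step=0.1):
    """Toy transition function (an assumption, not the paper's environment).

    Moves the state by `step * action` and returns None if the result
    leaves a thin ring around the unit circle, which stands in for the
    narrow region of valid states.
    """
    x = state[0] + step * action[0]
    y = state[1] + step * action[1]
    radius = math.hypot(x, y)
    return (x, y) if 0.9 <= radius <= 1.1 else None


def explore(start, n_iters=2000, n_actions=8, rng=None):
    """Grow a tree of reachable states in the style of a non-holonomic RRT.

    Each iteration samples a random target, picks the nearest tree node,
    tries a few random actions through the transition function, and keeps
    the valid successor that ends up closest to the target.
    """
    rng = rng or random.Random(0)
    tree = [start]
    for _ in range(n_iters):
        target = (rng.uniform(-1.2, 1.2), rng.uniform(-1.2, 1.2))
        nearest = min(tree, key=lambda s: math.dist(s, target))
        best = None
        for _ in range(n_actions):
            angle = rng.uniform(0.0, 2.0 * math.pi)
            nxt = transition(nearest, (math.cos(angle), math.sin(angle)))
            if nxt is None:
                continue  # action led outside the valid region
            if best is None or math.dist(nxt, target) < math.dist(best, target):
                best = nxt
        if best is not None:
            tree.append(best)
    return tree


def sample_reset(tree, rng):
    """Draw an episode start state uniformly from the explored states."""
    return rng.choice(tree)
```

In a training loop, each episode would start from `sample_reset(tree, rng)` rather than from a single fixed initial state, so a model-free learner sees states it would rarely reach through its own random exploration.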

All credit goes to Columbia University: Gagan Khandate∗†, Siqi Shang∗†, Eric T. Chang‡, Tristan Luca Saidi†, Yang Liu‡, Seth Matthew Dennis‡, Johnson Adams‡, and Matei Ciocarlie‡

†Dept. of Computer Science, ‡Dept. of Mechanical Engineering, ∗joint first authorship

Columbia University, New York, NY 10027, USA

Corresponding email: gagank@cs.columbia.edu

For Full Paper: https://arxiv.org/pdf/2303.03486.pdf